Meaningful GPU Instanced Rendering

What is GPU Instancing?

GPU-instanced rendering allows us to render thousands of copies of the same mesh in a single draw-call. It does this by reading per-instance data out of compute buffers we set up on the GPU. This makes it perfect for rendering thousands of scattered objects over large terrains. We are still limited by our GPU’s ability to compute, so tri-count & shader complexity still need to be managed carefully.

GPU instancing sounds great, but there are a few caveats:

It will not handle even basic frustum or distance culling. Anything off screen is clipped before rasterization, but every instance still has to pass through vertex processing to get that far, & that alone can be extremely expensive. We need to cull instances ourselves before they are submitted for rendering.

We are no longer using GameObjects, so we cannot attach collision or other behavior to the objects we’re rendering. Standard LODs are also out, so we need to handle that ourselves too.

To take real advantage of this rendering technique we need hundreds of thousands of meaningful transforms. Hand-placing that much content is out of the question, so we need a way to procedurally generate object placement. For something like grass over a flat plane, this is fairly trivial, but Sons of Ryke is a vertically complex game with overhangs & cavities. It’s also procedurally streamed in, so our approach needs to be modular.

Generating Placement Data

To render our objects we’re going to start with an area & a density value. We can then fill that area with an even spacing of the object we want to render based on that density value. Simple enough.
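As a rough illustration of this first step, here is a minimal Python sketch of filling a rectangular area with evenly spaced positions. The function name `uniform_grid` and the reading of density as "instances per square unit" are assumptions made for the example, not the names or units used in the actual system.

```python
import math


def uniform_grid(origin_x, origin_z, size_x, size_z, density):
    """Return (x, z) positions evenly filling a rectangle.

    density is treated as instances per square unit (an assumption),
    so the spacing between neighbours is 1 / sqrt(density).
    """
    if density <= 0:
        return []
    spacing = 1.0 / math.sqrt(density)
    count_x = max(1, int(size_x / spacing))
    count_z = max(1, int(size_z / spacing))
    positions = []
    for iz in range(count_z):
        for ix in range(count_x):
            # Centre each instance inside its grid cell.
            x = origin_x + (ix + 0.5) * spacing
            z = origin_z + (iz + 0.5) * spacing
            positions.append((x, z))
    return positions
```

Only the horizontal position is produced here; the height is filled in later by the generation step.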

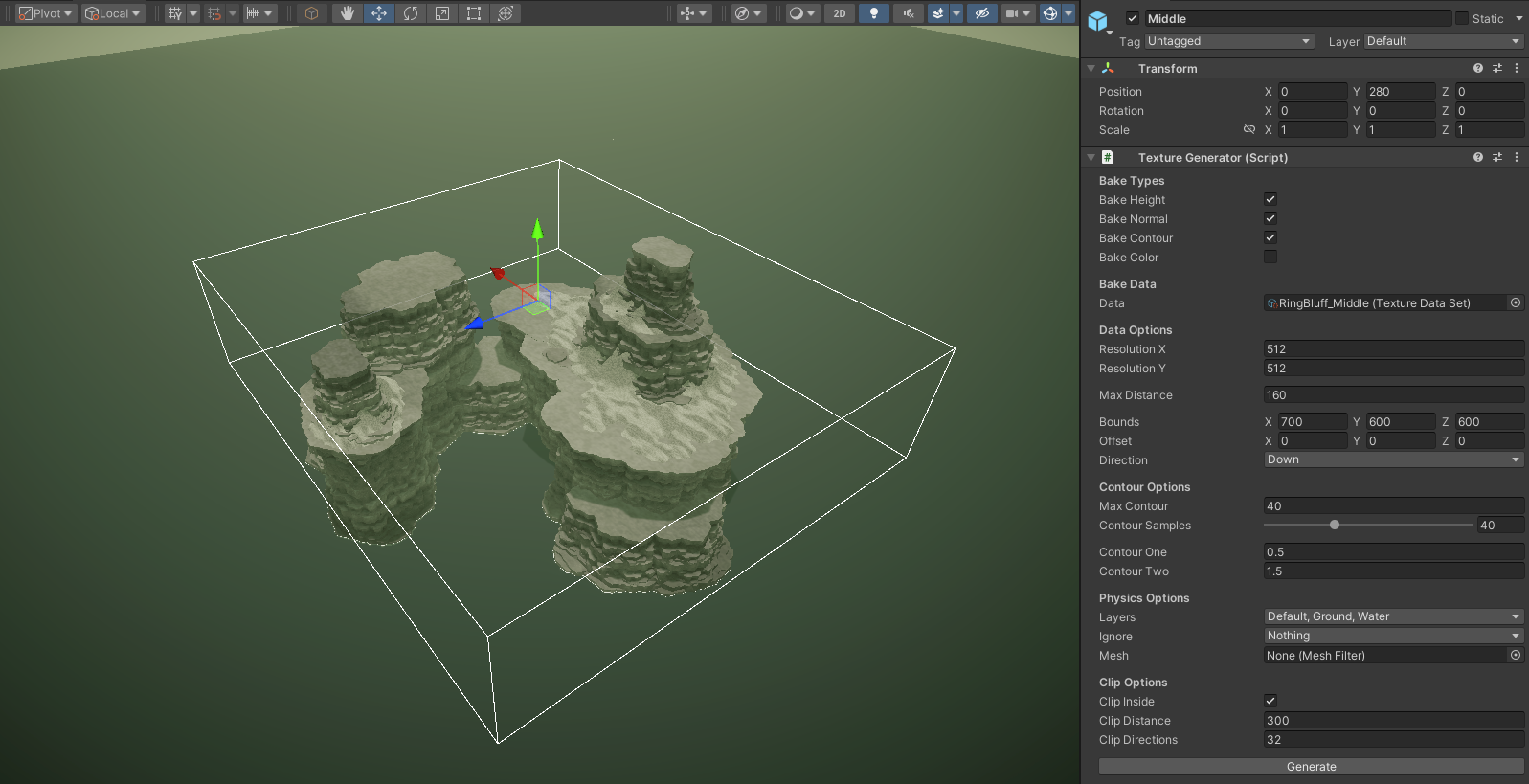

So now we have a uniform grid of objects floating mid-air. Not much use. Our first task is to get them to sit nicely over complex & sloping terrain. We could raycast down at each object position to find the height of the terrain, but we want to be able to perform this generation step at run-time & this is incredibly expensive, especially with tens of thousands of objects. Instead, we’re going to bake that height data into a texture & sample it during the run-time generation step. For this, we have the Texture Generator component.

The Texture Generator bakes height, normal, contour & even color data into textures that can be sampled & used in various ways in the generation step.
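To show why sampling a baked texture is so much cheaper than a raycast, here is a small Python sketch of a bilinear height lookup. A plain 2D list stands in for the baked height texture, & the function name `sample_height` and the normalised `(u, v)` convention are assumptions for illustration only.

```python
def sample_height(heightmap, u, v):
    """Bilinearly sample a 2D list of heights at normalised (u, v) in [0, 1]."""
    rows = len(heightmap)
    cols = len(heightmap[0])
    # Clamp so positions on the edge of the area still hit a valid texel.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    fx = u * (cols - 1)
    fy = v * (rows - 1)
    x0, y0 = int(fx), int(fy)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    tx, ty = fx - x0, fy - y0
    # Blend along x on the two neighbouring rows, then blend those along y.
    top = heightmap[y0][x0] * (1 - tx) + heightmap[y0][x1] * tx
    bottom = heightmap[y1][x0] * (1 - tx) + heightmap[y1][x1] * tx
    return top * (1 - ty) + bottom * ty
```

Each lookup is a handful of reads & multiplies, with no physics query involved, which is what makes it practical for tens of thousands of instances.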

The Generation Step

The generation step takes our uniform grid of objects & turns them into a set of natural, pseudo-random-looking placements that conform to the terrain. We do this using an artist-friendly modifier stack.

Modifiers operate on every instance & are super easy to add. Generally speaking, they do one of four things:

Adjust the position, rotation, or scale of the instance.

Clip the instance (Remove it from the renderer).

Adjust per-instance properties to be sampled in shader.

Select a specific “Genus” to use (Explained below).

The “Area Renderer” component is responsible for the rendering of our instances. It’s here that modifiers can be added, removed & adjusted in the Renderer’s inspector. A few examples of modifiers:

The “Texture Sample” modifier sets the height of an object to the terrain, or clips an object if no terrain is found. It can also rotate the object to match the slope of the terrain or sample the terrain color to blend the objects with the terrain.

The “Perlin Clip” & “Perlin Scale” modifiers clip/scale objects based on multiple octaves of Perlin noise. This produces a pseudo-random, natural-looking distribution.

The “Random Offset” displaces instances within a set range on each axis. This helps break up the original uniform grid placement.
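The idea of a modifier stack can be sketched in a few lines of Python. This is only an illustration of the pattern: the `Instance` fields & the modifier names `random_offset`, `snap_to_terrain` & `run_stack` are hypothetical, & the terrain sampler is assumed to return `None` where no terrain is found.

```python
import random
from dataclasses import dataclass


@dataclass
class Instance:
    x: float
    y: float
    z: float
    scale: float = 1.0
    clipped: bool = False


def random_offset(max_offset, seed=0):
    """Modifier: jitter x/z within +/- max_offset to break up the grid."""
    rng = random.Random(seed)

    def apply(inst):
        inst.x += rng.uniform(-max_offset, max_offset)
        inst.z += rng.uniform(-max_offset, max_offset)

    return apply


def snap_to_terrain(sample):
    """Modifier: set height from the terrain, or clip if there is none."""

    def apply(inst):
        height = sample(inst.x, inst.z)
        if height is None:
            inst.clipped = True
        else:
            inst.y = height

    return apply


def run_stack(instances, modifiers):
    """Apply each modifier in order; clipped instances skip later steps."""
    for modify in modifiers:
        for inst in instances:
            if not inst.clipped:
                modify(inst)
    return [inst for inst in instances if not inst.clipped]
```

Because every modifier has the same shape (take an instance, mutate or clip it), new ones can be added to the stack without touching the others.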

Now, we're performing this step at run-time as the world is streamed in, so it needs to be incredibly quick & efficient. Burst-powered jobs are perfect for this. They can perform the generation step on background threads without interrupting gameplay. Each modifier in the stack acts as a single step in a chained sequence of Burst jobs. The output is then passed into a compute buffer & loaded onto the GPU.

In Sons of Ryke, this full generation step is happening a few thousand times per minute as the player moves through the world & new nodes are streamed in & out. Without Burst, this simply wouldn’t be possible.

Putting It All Together

LODing & Culling

Passing data between the CPU & GPU is expensive, so we want to limit this as much as possible. Once we have our data buffers on the GPU we want to leave them alone.

LODing & culling are performed on the GPU using compute shaders. All the data we need is already on the GPU, so it’s fairly trivial to pass the camera’s position & frustum data in & perform some distance/frustum checks.

As each instance is evaluated, its index is added to one of four compute buffers, matching the LOD it will be rendered in. Anything culled is simply left out of these four buffers.

We then pass these buffers, alongside the specified LOD’s mesh & material, to the GPU to render that specific LOD. The shader uses these indexes to access the original data & draw the object in the correct position with any additional per-instance data. This does mean we’re paying an extra draw-call per LOD level, so they should be used sparingly.
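The real work here happens in a compute shader, but the logic is easy to follow on the CPU. The Python sketch below mirrors it under some assumptions: each instance is treated as a bounding sphere of a fixed `radius`, frustum planes are given as `(nx, ny, nz, d)` with normals pointing inwards, & `lod_distances` holds an ascending maximum distance per LOD. None of these names come from the actual implementation.

```python
import math


def bucket_by_lod(positions, camera, planes, lod_distances, radius=1.0):
    """Return one index list per LOD; culled instances appear in none.

    planes: (nx, ny, nz, d) with normals pointing into the frustum.
    lod_distances: ascending max distance for each LOD; anything past
    the last entry is distance-culled.
    """
    buckets = [[] for _ in lod_distances]
    for index, p in enumerate(positions):
        # Frustum test: reject if the sphere is fully behind any plane.
        if any(nx * p[0] + ny * p[1] + nz * p[2] + d < -radius
               for nx, ny, nz, d in planes):
            continue
        distance = math.dist(p, camera)
        # Pick the first (most detailed) LOD whose range covers the instance.
        for lod, max_distance in enumerate(lod_distances):
            if distance <= max_distance:
                buckets[lod].append(index)
                break
    return buckets
```

Note that only indexes are written out. The per-instance data itself never moves, which is what lets the original buffers stay untouched on the GPU.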

Randomizing our Genus

When scattering objects, we generally want to pick from a few variants. If all of our scattered trees or stones are identical it looks a little off. I’ve titled these variants “Genus”. Each Genus contains its own meshes, materials & LODing information. During the generation step, we select (usually randomly) a genus index. Then, in the last stage of the generation step, we divide our data among multiple “Sub-Renderers” based on this index. Each sub-renderer is then responsible for culling, LODing & rendering its specific Genus.
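The select-then-split flow can be sketched in Python as follows. The weighted random pick & the names `assign_genus` & `split_by_genus` are assumptions for the example; the real selection rule may well be different.

```python
import random


def assign_genus(count, weights, seed=0):
    """Pick a genus index per instance, weighted by how common each is."""
    rng = random.Random(seed)
    return rng.choices(range(len(weights)), weights=weights, k=count)


def split_by_genus(instances, genus_indices, genus_count):
    """Divide instances into one list per genus (one per sub-renderer)."""
    sub_renderers = [[] for _ in range(genus_count)]
    for inst, genus in zip(instances, genus_indices):
        sub_renderers[genus].append(inst)
    return sub_renderers
```

Splitting once at the end of generation means each sub-renderer only ever sees instances that share a mesh & material, so it can cull, LOD & draw them together.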

Layering Renderers

In a vertically complex environment, height-maps can only get us so far. There will be overhangs or cavities that a single height-map cannot reach. To solve this we use multiple layers of textures/renderers. In Sons of Ryke, the cliffs are divided into 80-meter layers, so overhangs can only exist every 80 meters. By stacking 3 layers of Texture Generators & Area Renderers we can render underneath those overhangs & inside any cavities. We also use separate Texture Generator / Area Renderer setups to generate water flora like lily pads over water, or hanging vines on the underside of overhangs.

Pooling

Having hundreds of layers & nodes constantly stream in & out presents a new problem. We built this system to reduce draw-calls, but now we have hundreds of individual renderers each submitting their own draw-calls for their tiny section of the map.

The solution is to pool data sets together. Instead of having hundreds of smaller data sets operating & culling independently, we have 1 massive data set that we perform LODing, culling & rendering with (per Genus). As new nodes are streamed into the world they are generated & added to the data pool, & as nodes are streamed out their data is removed. Constantly removing & re-adding data to the GPU can be slow & expensive, so we need to be careful here. Too few pools will produce too many draw-calls, but constantly re-uploading large data buffers to the GPU will stall your frame rate.
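One possible way to keep uploads small is to give the pool a fixed capacity, let nodes borrow & return slots, & track which slots changed so only those need re-uploading. The Python sketch below shows that idea. It is an assumption about how such a pool could be managed, not a description of the actual implementation, & the class & method names are hypothetical.

```python
class InstancePool:
    """Fixed-capacity pool; nodes borrow slots and hand them back."""

    def __init__(self, capacity):
        self.slots = [None] * capacity   # None marks an empty slot
        self.free = list(range(capacity - 1, -1, -1))
        self.node_slots = {}             # node id -> slots it owns
        self.dirty = set()               # slots changed since last upload

    def add_node(self, node_id, instances):
        if len(instances) > len(self.free):
            raise ValueError("pool is full")
        owned = []
        for inst in instances:
            slot = self.free.pop()
            self.slots[slot] = inst
            owned.append(slot)
        self.node_slots[node_id] = owned
        self.dirty.update(owned)

    def remove_node(self, node_id):
        for slot in self.node_slots.pop(node_id):
            self.slots[slot] = None
            self.free.append(slot)
            self.dirty.add(slot)

    def take_dirty(self):
        """Slots to re-upload this frame, instead of the whole buffer."""
        changed = sorted(self.dirty)
        self.dirty.clear()
        return changed
```

With this shape, streaming a node in or out touches only that node's slots. The culling pass would need to skip empty slots, which is a trade-off of leaving holes in the buffer rather than compacting it.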